This will be needlessly long‐winded, but I’m going to write about a very specific home network problem I ventured to solve a while back.

The problem

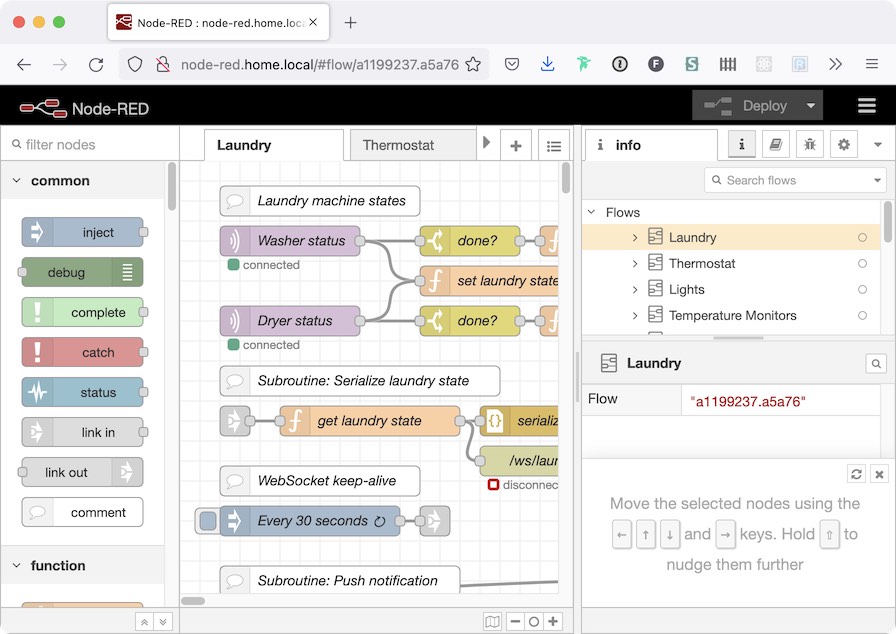

I have a Raspberry Pi in my house that functions as a home automation server. It’s on the network as home.local and has an IP address of (let’s say) 192.168.1.99. No Home Assistant — not yet, at least — but there are a handful of things I use to coordinate my home automation. Four of them have a web presence:

- Node‐RED for if‐this‐then‐that stuff and other simple logic things. The frontend runs on a configurable port number, 1880 by default. Can be accessed at http://home.local:1880.
- Homebridge for acting as a HomeKit gateway to a bunch of non‐HomeKit stuff, mainly smart outlets and light bulbs that run Tasmota. Has a great web frontend that runs on a configurable port number, 8080 by default. Can be accessed at http://home.local:8080.
- Zigbee2MQTT for a few Zigbee devices I have in the house. (I mainly use WiFi for my smart devices, but Zigbee’s a better fit for things that aren’t near an outlet, like battery‐powered buttons or sensors that report data very occasionally.) Has a very useful web frontend that runs on a configurable port number, 8080 by default. Can be accessed at http://home.local:8080.
- A static web site that acts as a dashboard for home automation stuff: graphing temperatures of various rooms over the last eight hours, states of various light bulbs, and so on. Really silly, and not something I consult very often, but I like keeping it around. Doesn’t have its own server, so I need to set something up.

I can configure these port numbers so that they don’t clash, but is that the limit of my imagination? I don’t care about the port that something runs on, and I don’t want to have to remember it. These things have names; I want to give them URLs that leverage those names.

I want these services to have “subdomains” of home.local. I want node-red.home.local to take me to the Node‐RED frontend. Likewise with homebridge.home.local, zigbee2mqtt.home.local, and dashboard.home.local.

“Subdomain” is in quotes because it’s not the right word here, but for now just think of them as four separate sites with four separate domain names.

How do I pull this off? Split the problem into two parts:

- First, we need dashboard.home.local and the others to resolve to 192.168.1.99 just like home.local does.
- Then we’ve got to make the server respond with a different site for each thing based on which host name is in the URL. A web server has a name for this, and has since HTTP 1.1: these are virtual hosts.

The DNS side of this (the hard part)

This part will apply to people like me who prefer to use Multicast DNS instead of running their own DNS server to handle name resolution.

Why mDNS?

Let’s get this out of the way. Here are my arguments in favor of mDNS:

- The things I use support it out of the box. Raspberry Pis have Avahi preinstalled these days, and Raspberry Pi Imager makes it possible to set your Pi’s hostname and enable SSH during the installation process (via the hidden options panel). If you set the hostname to foo during installation, on first boot you’ll be able to SSH into it at foo.local without having to plug it into a monitor first or figure out its IP address.
- mDNS is also within the reach of embedded devices. My ESP8266s can broadcast a hostname with the built‐in ESP8266mDNS library, and can resolve mDNS hostnames with mDNSResolver.
- It looks like a domain name. My brain needs that. It will never feel normal to me to type in http://thing/foo, even if my network can resolve thing to an IP address. What the hell is that? Of course, I could add a faux‐TLD like .dev, but it doesn’t exactly feel safe to invent a TLD in this crazy future where new TLDs are introduced all the time. The .local TLD(-ish) thing is part of RFC 6762; it’s “safe” in the sense that it would be a stupid idea for ICANN to introduce a .local TLD, stupid even by ICANN’s standards.

Why not ordinary DNS?

It’s easy enough to add a DNS server to a spare Pi. Somewhat fashionable, too, judging from the popularity of Pi‐Hole. And your router can be configured to use your internal DNS server so that you don’t have to change your DNS settings on all of your network devices.

I’ll give you the short answer: mDNS is a parallel and alternative way to resolve names, but a local DNS server is a wrapper around whatever DNS server you prefer for the internet at large. I can easily set up a Pi as my DNS server and tell it to resolve what it can, delegating everything else to 8.8.8.8 or whatever. But I’ve now made the Pi the most likely point of failure, and when it does fail, all my DNS lookups will fail, not just the local ones.

When I set up a Pi‐Hole, I had two DNS outages the first day. Bad luck? Probably. I could’ve tracked down the root cause, but by definition you will only notice a DNS failure when you’re in the middle of something else.

Anyway, this is not to say that an mDNS approach is better — only that name servers are essential infrastructure for network devices, and running my own name server on a $40 credit‐card‐sized computer was not a hassle‐free experience. Maybe if I owned the house I live in, I could put ethernet into the walls and replace my mesh Wi‐Fi network with something that has fewer possible points of failure, but you go to war with the network you have.

How do subdomains work in mDNS?

They don’t.

Well, they work just fine as domain names, but they don’t have the semantics of subdomains that you’d come to expect from the DNS world. To quote the mDNS RFC:

Multicast DNS domains are not delegated from their parent domain via use of NS (Name Server) records, and there is also no concept of delegation of subdomains within a Multicast DNS domain. Just because a particular host on the network may answer queries for a particular record type with the name “example.local.” does not imply anything about whether that host will answer for the name “child.example.local.”, or indeed for other record types with the name “example.local.”.

Outside‐world DNS describes a hierarchy where foo.example.com is a subdomain of example.com, and example.com is (technically) a subdomain of com. Web browsers build features upon this implied hierarchy, such as allowing cookies set on example.com to be sent on requests for foo.example.com.
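To make that hierarchy concrete, here’s a minimal Python sketch of the “domain‐match” rule browsers use when deciding whether a cookie applies to a host (RFC 6265, section 5.1.3). It’s simplified: real browsers also refuse cookies scoped to public suffixes like com, which this ignores, and the function name is mine.

```python
import ipaddress


def domain_matches(host: str, domain: str) -> bool:
    """Simplified RFC 6265 domain-match: does a cookie for `domain` apply to `host`?"""
    host, domain = host.lower(), domain.lower()
    if host == domain:
        return True
    try:
        ipaddress.ip_address(host)
        return False  # IP addresses only match themselves, never a "parent"
    except ValueError:
        pass
    # `domain` must be a suffix of `host`, and the character just before it must be a dot
    return host.endswith("." + domain)


print(domain_matches("foo.example.com", "example.com"))  # True
print(domain_matches("badexample.com", "example.com"))   # False
```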

In mDNS, foo.local is not a subdomain of local. That .local is just a tag on the end meant to act as a namespace. So bar.foo.local can exist, but it’s just bar.foo with .local added on, and is not understood by mDNS to be a subdomain of foo.local. The two names can coexist within the same network, but they could point to different machines or the same machine.

The RFC says that any UTF‐8 string (more or less) is a valid name in mDNS, and that includes the period. So I can publish homebridge.home.local and have it resolve to the same machine as home.local.
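At the wire level, a name like homebridge.home.local is just a sequence of length‐prefixed labels, and an mDNS query asks for that exact name; nothing in the packet treats home.local as a parent. Here’s a sketch of building a one‐shot mDNS query for an A record in Python. The helper names are my own; the constants come from RFC 6762 and the DNS message format, and response parsing is deliberately left out.

```python
import socket
import struct

MDNS_ADDR = ("224.0.0.251", 5353)  # IPv4 mDNS multicast group and port (RFC 6762)


def encode_name(name: str) -> bytes:
    """Encode a dotted name as DNS labels: a length byte before each label, then a zero byte."""
    out = bytearray()
    for label in name.rstrip(".").split("."):
        raw = label.encode("utf-8")
        if not 1 <= len(raw) <= 63:
            raise ValueError(f"invalid label {label!r} in {name!r}")
        out.append(len(raw))
        out += raw
    out.append(0)
    return bytes(out)


def build_a_query(name: str) -> bytes:
    """Build a DNS query message asking for the A record of `name`."""
    # Header: ID 0 (RFC 6762 says multicast queries should use 0), flags 0,
    # one question, zero answer/authority/additional records.
    header = struct.pack("!6H", 0, 0, 1, 0, 0, 0)
    # Question section: the encoded name, QTYPE 1 (A), QCLASS 1 (IN).
    return header + encode_name(name) + struct.pack("!2H", 1, 1)


def one_shot_query(name: str, timeout: float = 2.0) -> bytes:
    """Send the query from an ephemeral port. Per RFC 6762 section 5.1, responders
    answer a querier that isn't using port 5353 by unicast to its source port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_a_query(name), MDNS_ADDR)
        reply, _addr = sock.recvfrom(9000)
        return reply  # raw response bytes; parsing is out of scope for this sketch
```

If nothing on the network is publishing that exact name, one_shot_query("homebridge.home.local") simply times out, no matter how happily home.local answers for itself.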

So why invent a subdomain concept where one doesn’t exist?

It feels right, I guess?

The only advantage of the “subdomain” here is in my brain and its desire to treat these as child‐sites of my server at home.local. In a minute I’ll give you a compelling reason to use a simpler system instead. But this is how I did it.

Are there any downsides to the subdomain approach?

There is a tiny downside, yes, almost too small to mention: Windows doesn’t support it at all.

Before Windows 10, to get mDNS support in Windows, you could install Bonjour Print Services for Windows. Since Windows 10, there’s built‐in mDNS support from the OS, except it’s bad.

Trying ping from the command line illustrates the problem:

```
C:\Users\Andrew>ping bar.local
Ping request could not find host bar.local. Please check the name and try again.

C:\Users\Andrew>ping foo.bar.local
Ping request could not find host foo.bar.local. Please check the name and try again.
```

Here I’m pinging two names on my network, both of them nonexistent. The first one returns an error message after about two seconds; it tried to resolve bar.local and failed. The second one returns an error message immediately, as though it didn’t even try. Windows does not support mDNS resolution of names with more than one period.

This is flat‐out wrong behavior — foo.bar.local is a valid name in mDNS — but there you have it. I suspect it’s because .local has been used by Microsoft products in the past in some non‐mDNS contexts; maybe there’s a heuristic somewhere in Windows that thinks foo.bar.local is one of those usages and can’t be convinced otherwise.

When I discovered this, I attempted to disable the built‐in mDNS support and reinstall Bonjour Print Services, but I failed. Maybe there’s a brilliant way to make it work right, but I haven’t found it.

Since I have exactly one Windows machine in my house, I’m satisfied with a low‐tech workaround: the venerable hosts file. On my one Windows machine, all subdomain‐style mDNS aliases go in there:

```
192.168.1.99 dashboard.home.local
192.168.1.99 homebridge.home.local
```

…and so on. Keeping this file updated when I add aliases is barely a chore; it’s not like there’s a new alias every week.
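If your aliases already live in a list somewhere, generating those entries takes a few lines of Python. A sketch, assuming the Pi’s address is 192.168.1.99; it only emits names with more than one period, since those are the ones Windows won’t resolve over mDNS. The output gets pasted into C:\Windows\System32\drivers\etc\hosts, which needs an administrator to edit.

```python
from typing import Iterable


def hosts_entries(ip: str, aliases: Iterable[str]) -> str:
    """Render hosts-file lines for the aliases Windows can't resolve via mDNS."""
    lines = []
    for alias in aliases:
        alias = alias.strip()
        # Names with a single period (like home.local) already work on Windows
        if alias.count(".") > 1:
            lines.append(f"{ip} {alias}")
    return "\n".join(lines) + "\n" if lines else ""


print(hosts_entries("192.168.1.99", ["home.local", "dashboard.home.local", "homebridge.home.local"]), end="")
```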

So this isn’t a deal‐breaker for me. But if it’s one for you, there’s an easy workaround: don’t use subdomains. Instead of dashboard.home.local, do dashboard-home.local, or just dashboard.local if you prefer simplicity. As long as it has exactly one . in it, Windows handles it fine.
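Converting existing subdomain‐style names to the Windows‐friendly form is mechanical: replace every period except the last with a hyphen. A small sketch (the function name is mine):

```python
def flatten_alias(name: str, sep: str = "-") -> str:
    """'dashboard.home.local' -> 'dashboard-home.local': keep only the final period."""
    stem, dot, tld = name.rstrip(".").rpartition(".")
    if not dot:
        raise ValueError(f"{name!r} has no TLD to keep")
    return stem.replace(".", sep) + dot + tld


for alias in ["dashboard.home.local", "node-red.home.local", "home.local"]:
    print(alias, "->", flatten_alias(alias))
```

Because names like node-red already contain hyphens, the flattened node-red-home.local is a bit ambiguous to a human reader, though not to the resolver.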

How do I broadcast extra names?

OK, all that’s out of the way. Back to the problem: we want dashboard.home.local and the rest to resolve to the same IP address as home.local. How hard could that be?

Red herring: /etc/avahi/hosts

On Linux, Avahi is in charge of broadcasting the Pi’s hostname as [hostname].local. Could it broadcast the other names we want? Let’s dig into its config directory… aha! There’s a file called /etc/avahi/hosts!

Quoth its man page:

The file format is similar to the one of /etc/hosts: on each line an IP address and the corresponding host name. The host names should be in FQDN form, i.e. with appended .local suffix.

Couldn’t be simpler. So we just need to put them into this file, right?

I’ll save you the trouble of trying. To illustrate the problem more directly, let’s use the avahi-publish utility to try to broadcast an mDNS name:

```
sudo apt install avahi-utils
avahi-publish -a foo.home.local 192.168.1.99
```

We get the response:

```
Failed to add address: Local name collision
```

This happens because, by default, Avahi expects each IP address to have exactly one name on the network. Just as it says “home.local resolves to 192.168.1.99,” it wants to be able to say “192.168.1.99 is called home.local” without ambiguity. Since this IP address already has a name, it won’t let us add a second unless we add the --no-reverse (or -R) parameter:

```
avahi-publish -a foo.home.local -R 192.168.1.99
```

Now we get what we wanted:

```
Established under name 'foo.home.local'
```

So if I can do it with avahi-publish, I can do it in /etc/avahi/hosts, right? Well, no. There’s no way to specify the no‐reverse option within the hosts file; that’s the downside of imitating the simplicity of /etc/hosts.

Maybe it’ll behave differently in the future, but for now we’ll have to publish these aliases a different way. If we can’t just put it into the config file, we’ll have to write a startup script and make a systemd service out of it.

Script Option 1: avahi‐publish

Now that we know about avahi-publish, the obvious approach would be to write a script that looks like this:

```bash
#!/bin/bash
/usr/bin/avahi-publish -a homebridge.home.local -R 192.168.1.99 &
/usr/bin/avahi-publish -a node-red.home.local -R 192.168.1.99 &
/usr/bin/avahi-publish -a zigbee2mqtt.home.local -R 192.168.1.99 &
```

(Wait, am I writing a Bash script in public? I’ve had nightmares like this.)

Did you notice that avahi-publish is still running from earlier, and will run indefinitely until we terminate the process with ^C or the like? That’s why those ampersands are needed in the script: each one runs its command in the background, so the script can move on to the next line instead of blocking.

This will work fine as a one‐shot script, but not as a daemon. We want this script to run indefinitely and to clean up those child processes when the daemon is stopped.

Let’s make it wait indefinitely:

```bash
#!/bin/bash
/usr/bin/avahi-publish -a homebridge.home.local -R 192.168.1.99 &
/usr/bin/avahi-publish -a node-red.home.local -R 192.168.1.99 &
/usr/bin/avahi-publish -a zigbee2mqtt.home.local -R 192.168.1.99 &
while true; do sleep 10000; done
```

(This is ugly, but portable. sleep infinity works just fine on Linux, but not on macOS.)

We’re getting somewhere, but I think we need to do something else. We’re creating one new child process for each alias we’re publishing, and I’m kinda sure that those processes will stick around if this script terminates. Let’s trap SIGTERM and make sure that those child processes also get terminated:

```bash
#!/bin/bash

function _term {
  # Kill every child of this script (the avahi-publish processes), then exit
  pkill -P $$
  exit 0
}

trap _term SIGTERM

/usr/bin/avahi-publish -a homebridge.home.local -R 192.168.1.99 &
/usr/bin/avahi-publish -a node-red.home.local -R 192.168.1.99 &
/usr/bin/avahi-publish -a zigbee2mqtt.home.local -R 192.168.1.99 &

# Use `wait` instead of the sleep loop: Bash defers a trap until a foreground
# command (like sleep) finishes, but `wait` returns as soon as a trapped
# signal arrives, so _term runs right away.
wait
```

Playing around with this, I’m pretty sure it’ll do the right thing, and will daemonize nicely when we run it later on. If you were satisfied with this, you could save it to someplace like /home/pi/scripts/publish-mdns-aliases.sh and skip down to the systemd section, but I think you should keep reading.
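For comparison, the same supervisor pattern can be sketched in Python. This is my own port, not part of the original setup, and it still juggles one child process per alias, which is exactly the overhead the next option gets rid of. The avahi-publish path and flags are the ones used above.

```python
import signal
import subprocess
from typing import List

AVAHI_PUBLISH = "/usr/bin/avahi-publish"


def publish_commands(aliases: List[str], ip: str) -> List[List[str]]:
    """One avahi-publish invocation per alias; -R (--no-reverse) skips the
    reverse entry that would collide with the host's own name."""
    return [[AVAHI_PUBLISH, "-a", alias, "-R", ip] for alias in aliases]


def run(aliases: List[str], ip: str) -> None:
    """Start one publisher per alias and stop them all on SIGTERM or SIGINT."""
    children = [subprocess.Popen(cmd) for cmd in publish_commands(aliases, ip)]

    def stop(signum, frame):
        # Only send signals here and let the loop below do the waiting;
        # calling wait() inside the handler can deadlock on Popen's internal lock.
        for child in children:
            child.terminate()

    signal.signal(signal.SIGTERM, stop)
    signal.signal(signal.SIGINT, stop)
    for child in children:
        child.wait()  # returns once each avahi-publish has exited
```

Usage would be run(["homebridge.home.local", "node-red.home.local", "zigbee2mqtt.home.local"], "192.168.1.99").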

I fumbled my way through the writing of that Bash script, but I would prefer not to have to manage these child processes at all. I’d like a setup where I create only one process no matter how many aliases I’m publishing.

Script Option 2: Python’s mdns‐publisher

Hey, there’s a Python package that does what we want! Let’s install it.

```
pip install mdns-publisher
which mdns-publish-cname
```

On my machine (Raspbian 11, or bullseye), that works just fine and outputs /home/pi/.local/bin/mdns-publish-cname. If which doesn’t find it, you might need to add PATH="$HOME/.local/bin:$PATH" to .profile or .bashrc. Or, if you’d rather install it globally, run sudo pip install mdns-publisher instead and which will return /usr/local/bin/mdns-publish-cname.
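If you want a script to find the binary whichever way you installed it, a small Python helper can check PATH first and then the two locations mentioned above. A sketch; the function name is mine:

```python
import os
import shutil
from pathlib import Path


def find_publisher(name: str = "mdns-publish-cname") -> str:
    """Locate the mdns-publish-cname entry point: PATH first, then pip's usual install dirs."""
    found = shutil.which(name)
    if found:
        return found
    for candidate in (Path.home() / ".local" / "bin" / name, Path("/usr/local/bin") / name):
        if candidate.is_file() and os.access(candidate, os.X_OK):
            return str(candidate)
    raise FileNotFoundError(f"{name} not found; is mdns-publisher installed?")
```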

The mdns-publish-cname binary is great because it accepts any number of aliases as arguments. Run it yourself and see:

```
mdns-publish-cname dashboard.home.local homebridge.home.local node-red.home.local zigbee2mqtt.home.local
```

All four of those hostnames should now respond to ping.
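ping works, but if you want to check every alias at once, Python’s socket.gethostbyname goes through the same system resolver that ping uses (on Linux that’s usually nss‐mdns for .local names), so it’s a fair stand‐in. A sketch:

```python
import socket
from typing import Dict, Iterable, Optional


def resolve_all(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each name to its IPv4 address, or None if the system resolver can't find it."""
    results: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            results[name] = socket.gethostbyname(name)
        except socket.gaierror:
            results[name] = None
    return results
```

Calling resolve_all(["dashboard.home.local", "node-red.home.local"]) while mdns-publish-cname is running should map every alias to 192.168.1.99; a None means that alias isn’t resolving.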

Perfect! It does just what we want in a single process. And it assumes we want to publish these aliases for ourselves, rather than for another machine, so we don’t even need to hard‐code an IP address.

To me, this is clearly superior to Option 1. Sure, I had to install a pip package first, but I had to install avahi-utils via APT before I could use avahi-publish, so I think that’s a wash.

Let’s daemonize it and get the hell out of here

Not content with having solved our problem with elegance, the mdns‐publisher repo also includes a sample systemd service file that we’ll use as a starting point for our own.

There are a few lines worth reflecting on:

```ini
After=network.target avahi-daemon.service
```

This is important because we can’t publish aliases if Avahi hasn’t started yet.

If you went with Script Option 1, there’s another thing to take care of: the page we cribbed this from says it needs to restart if Avahi itself restarts. To make that happen, you’d need one extra line in the [Unit] section:

```ini
PartOf=avahi-daemon.service
```

If you’re using Script Option 2, this doesn’t seem to be necessary. If I restart Avahi while I monitor the mdns‐publisher output, I can see that mdns-publish-cname somehow knows to republish the aliases.

```ini
Restart=no
```

I’d usually go with on-failure in my service files, but maybe no is OK here. I think it depends on whether the daemon would exit non‐successfully for intermittent reasons, or for a reason that’s likely to persist. If it’s the latter, you’ll just end up in a restart loop that you’d have to manage with other systemd options like StartLimitBurst. I’ll keep this as‐is.

```ini
ExecStart=/usr/bin/mdns-publish-cname --ttl 20 vhost1.local vhost2.local
```

You’ll need to point this to /home/pi/.local/bin/mdns-publish-cname, or /usr/local/bin/mdns-publish-cname if you installed the package with sudo. If you do the former, you’ll want to set User=pi instead of User=nobody, because the nobody user won’t be able to run a binary located inside your pi user’s home folder.

Of course, you’ll want to change vhost1.local vhost2.local to the actual aliases you want to publish. But instead of doing that, let’s go one step further:

Aliases in a config file

It feels more intuitive to me to put my aliases inside a config file. After all, a Pi’s primary hostname isn’t specified in the text of some systemd unit config file; it lives at /etc/hostname.

So I created /home/pi/.mdns-aliases for keeping a list of aliases, one per line:

```
dashboard.home.local
node-red.home.local
homebridge.home.local
zigbee2mqtt.home.local
```

I then wrote a Python script to read from that list:

```python
#!/usr/bin/env python3
import os

args = ['mdns-publish-cname']

with open('/home/pi/.mdns-aliases', 'r') as f:
    for line in f:
        line = line.strip()
        if line:  # skip blank lines
            args.append(line)

# Replace this process with mdns-publish-cname; args[0] is the program name it sees
os.execv('/home/pi/.local/bin/mdns-publish-cname', args)
```

We read each line, strip whitespace, toss out blank lines (like if I inadvertently add a newline to the end), and pass along the rest as arguments to mdns-publish-cname. The os.execv call works like exec in the shell: it replaces the current process with the one specified. That’s perfect for our purposes.

Save that script somewhere (I saved mine at /home/pi/scripts/publish-mdns-aliases.py), make it executable with chmod +x, and run it as a test. Make sure the output is what you expect. Make sure that you can ping each of your aliases while this script is running, but then be unable to ping them once you quit the script with ^C.
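If you later want to test the parsing on its own, or let the alias file carry comments, it helps to pull the reading into a function. A sketch; the # comment support and de‐duplication are my additions, not part of the script above:

```python
import os
from typing import Iterable, List

ALIASES_FILE = "/home/pi/.mdns-aliases"
PUBLISHER = "/home/pi/.local/bin/mdns-publish-cname"


def parse_aliases(lines: Iterable[str]) -> List[str]:
    """Return the aliases in file order, ignoring blanks, '#' comments, and repeats."""
    aliases: List[str] = []
    for line in lines:
        alias = line.split("#", 1)[0].strip()
        if alias and alias not in aliases:
            aliases.append(alias)
    return aliases


def main() -> None:
    with open(ALIASES_FILE) as f:
        aliases = parse_aliases(f)
    # Same hand-off as before: replace this process with mdns-publish-cname
    os.execv(PUBLISHER, ["mdns-publish-cname", *aliases])
```

To use it as the service’s ExecStart, add a call to main() at the bottom (or behind an if __name__ == "__main__": guard).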

Now we can simplify the service file:

```ini
[Unit]
Description=Avahi/mDNS CNAME publisher
After=network.target avahi-daemon.service

[Service]
User=pi
Type=simple
WorkingDirectory=/home/pi
ExecStart=/home/pi/scripts/publish-mdns-aliases.py
Restart=no
PrivateTmp=true
PrivateDevices=true

[Install]
WantedBy=multi-user.target
```

Save it in your home directory as mdns-publisher.service, then run:

```
# Unit files shouldn't be executable; systemd logs a warning if they are
chmod 644 mdns-publisher.service
sudo chown root:root mdns-publisher.service
sudo mv mdns-publisher.service /etc/systemd/system
sudo systemctl enable mdns-publisher
```

Now we’ll start it up and monitor its output:

```
sudo systemctl start mdns-publisher.service && sudo journalctl -u mdns-publisher -f
```

The output should look quite similar to what you saw earlier when you ran the script directly.

We’re more than half done

I promise that was the hard part. Now that we’ve got a persistent way to give our Pi more than one mDNS name, we can move on to the other half of this task.

The web server side of this (the easy part)

If I type node-red.home.local into my browser’s address bar, what do I want to happen?

- It will resolve node-red.home.local to 192.168.1.99. (We just proved it.)
- That request should hit port 80 of my home server.
- The response from the server should be identical to what it would be if I’d typed in home.local:1880.
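Stripped of nginx syntax, that last step is a lookup: take the Host header, find the matching site, forward the request. Here’s a Python sketch of just the lookup. The table is hypothetical (the Homebridge port is a placeholder, since it and Zigbee2MQTT both default to 8080 and one of them has to move), and it targets 127.0.0.1 on the assumption that the proxy runs on the same Pi as the services.

```python
from typing import Dict, Optional

# Hypothetical virtual-host table: Host header value -> local port
UPSTREAMS: Dict[str, int] = {
    "node-red.home.local": 1880,
    "homebridge.home.local": 8581,  # placeholder port
    "zigbee2mqtt.home.local": 8080,
}


def pick_upstream(host_header: str, upstreams: Dict[str, int] = UPSTREAMS) -> Optional[str]:
    """Return the backend URL for a Host header, or None if no site matches."""
    # Drop an optional ":port" suffix (IPv6 literals aren't handled here);
    # host names are case-insensitive, and a trailing dot is legal.
    name = host_header.strip().rsplit(":", 1)[0].lower().rstrip(".")
    port = upstreams.get(name)
    return None if port is None else f"http://127.0.0.1:{port}"
```

nginx doesn’t return “nothing” for an unmatched name, though: it hands the request to the default server for that port, which on Debian is the stock welcome page.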

This is more or less a reverse proxy, so let’s attempt to handle this with nginx.

```
sudo apt install nginx
cd /etc/nginx/sites-available
```

Proxy a web server running on a specific port

Nginx allows modular per‐site configuration in a similar way to Apache: inside its config directory there are directories called sites-available and sites-enabled. You can define a config file in sites-available, then symlink it into sites-enabled to enable it.

```
sudo nano node-red.conf
```

Now we’ll look at the nginx documentation for a few minutes and throw something together. No, I’m kidding; that’s a very funny joke. In truth, we’ll google “nginx proxy config file example” or something like that, click on results until we find something that’s close to what we want, then tweak it until it’s exactly what we want.

```nginx
server {
    listen 80;       # for IPv4
    listen [::]:80;  # for IPv6

    server_name node-red.home.local;
    access_log /var/log/nginx/node-red.access.log;

    location / {
        proxy_pass http://home.local:1880;

        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $remote_addr;

        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";

        proxy_cache_bypass 1;
        proxy_no_cache 1;
        port_in_redirect on;
    }
}
```

Let’s call out some stuff:

- The server_name directive means that this server block should only apply when the Host request header matches node-red.home.local.
- location / will match any request for this host, since it’s the only location directive in the file. Every path starts with /, and nginx will use whichever block has the longest matching prefix.
- proxy_pass will transparently serve the contents of its URL without a redirect.
- We use proxy_set_header to make sure that the software serving up the proxied site sees a Host header of node-red.home.local; if we omitted this, it’d see nginx’s default, the host and port from proxy_pass (home.local:1880). This one is tricky; it may not always be necessary, and in some cases might even break stuff. Remember that you’re forwarding to a different web server in this example. Consider what that web server would expect to see, if anything, and whether it would behave incorrectly if Host were different from its expectation. Node‐RED seems to serve everything up with absolute paths or relative URLs, and therefore doesn’t need to care about its own hostname. I’m leaving this line in because it’s easier to keep it around (and comment it out if you don’t need it) than to have to look it up in the cases where you do need it.
- I use Node‐RED to make some data available via WebSockets, so we also have to make sure nginx can handle those requests. First, we make a point to pass along any Upgrade and Connection headers; by default they wouldn’t survive to the next “hop” in the chain, but WebSockets use those headers to switch protocols. (The proxy_http_version 1.1 line matters for the same reason: nginx speaks HTTP/1.0 to upstream servers by default, and the protocol switch needs HTTP/1.1.) We skip the proxy cache because Google told me to.

Anything I didn’t explain just now is something I don’t fully understand but am too nervous to remove just in case it’s important.
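The “hop” business is worth spelling out. A proxy is supposed to drop hop‐by‐hop headers (a fixed list, plus anything named in the Connection header) before forwarding a request, which is why Upgrade and Connection never reach Node‐RED unless nginx is told to set them again. A Python sketch of that filtering; the list follows RFC 2616 §13.5.1, the same one Python’s wsgiref uses, and the function name is mine:

```python
from typing import Dict

# Hop-by-hop headers from RFC 2616 section 13.5.1. That RFC spells one of them
# "Trailers" while the real header is "Trailer", so both are included.
HOP_BY_HOP = {
    "connection", "keep-alive", "proxy-authenticate", "proxy-authorization",
    "te", "trailer", "trailers", "transfer-encoding", "upgrade",
}


def forwardable_headers(headers: Dict[str, str]) -> Dict[str, str]:
    """Drop the headers a proxy must not pass along to the next hop."""
    # Any header named in Connection is hop-by-hop for this message, too.
    connection = next((v for k, v in headers.items() if k.lower() == "connection"), "")
    listed = {token.strip().lower() for token in connection.split(",") if token.strip()}
    drop = HOP_BY_HOP | listed
    return {k: v for k, v in headers.items() if k.lower() not in drop}
```

A WebSocket handshake lives entirely in those dropped headers, so the two proxy_set_header lines for Upgrade and Connection are what put it back.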

Anyway, let’s save this file and return to the shell.

Now we create our symbolic link:

```
cd ../sites-enabled
sudo ln -s ../sites-available/node-red.conf .
```

Run an ls -l for sanity’s sake and make sure you see your symlinked node-red.conf. If you’re really paranoid, run cat node-red.conf and make sure you see the contents of the file.

Now we’ll restart nginx and monitor the startup log:

```
sudo systemctl restart nginx && sudo journalctl -u nginx -f
```

You want to watch the logs after you enable a site because, if you’re like me, you will have screwed up the config somehow, even if you directly copied and pasted it from someplace and barely changed it. If nginx can’t parse the config file, it’ll explain what you did wrong.
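One more check that takes mDNS out of the equation: connect to the Pi by IP address but send the virtual host’s name in the Host header, which is what nginx uses to choose among server blocks listening on the same port. A Python sketch (curl -H 'Host: node-red.home.local' http://192.168.1.99/ does the same thing from the shell); the function names are mine:

```python
import urllib.error
import urllib.request


def vhost_request(server_ip: str, vhost: str, path: str = "/") -> urllib.request.Request:
    """Address the server by IP, but name the virtual host in the Host header."""
    return urllib.request.Request(f"http://{server_ip}{path}", headers={"Host": vhost})


def vhost_status(server_ip: str, vhost: str, path: str = "/", timeout: float = 5.0) -> int:
    """Return the HTTP status code the server sends back for that virtual host."""
    try:
        with urllib.request.urlopen(vhost_request(server_ip, vhost, path), timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # urlopen raises on 4xx/5xx; report the code instead
```

vhost_status("192.168.1.99", "node-red.home.local") returning 200 means the server block and the proxy both work, independent of name resolution.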

Did it work? Type node-red.home.local into your address bar and find out:

Beautiful. Buoyed by this success, we’ll create similar files in sites-available called homebridge.conf and zigbee2mqtt.conf, and point them to their corresponding ports.
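If copying node-red.conf twice by hand sounds error‐prone, the files can be generated from a table instead. A Python sketch that reproduces the server block above with the name and port filled in. The Homebridge port is a placeholder (both services default to 8080, so one has to move), and writing to /etc/nginx/sites-available needs sudo. You’d still symlink each file into sites-enabled and restart nginx as before.

```python
from pathlib import Path
from typing import Dict, Tuple

SITE_TEMPLATE = """server {{
    listen 80;
    listen [::]:80;

    server_name {host};
    access_log /var/log/nginx/{name}.access.log;

    location / {{
        proxy_pass http://home.local:{port};

        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $remote_addr;

        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";

        proxy_cache_bypass 1;
        proxy_no_cache 1;
        port_in_redirect on;
    }}
}}
"""

# Hypothetical table: config name -> (virtual host, upstream port)
SITES: Dict[str, Tuple[str, int]] = {
    "homebridge": ("homebridge.home.local", 8581),  # placeholder port
    "zigbee2mqtt": ("zigbee2mqtt.home.local", 8080),
}


def render_site(name: str, host: str, port: int) -> str:
    """Fill the template; doubled braces in it come out as literal nginx braces."""
    return SITE_TEMPLATE.format(name=name, host=host, port=port)


def write_sites(sites: Dict[str, Tuple[str, int]], directory: str = "/etc/nginx/sites-available") -> None:
    """Write one <name>.conf per entry into `directory`."""
    for name, (host, port) in sites.items():
        Path(directory, f"{name}.conf").write_text(render_site(name, host, port))
```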

Serve up some flat files

The last one is a hell of a lot easier than this: my dashboard site is just a JAMstack (ugh) app that requires nothing more complicated from the server than the ability to serve static files. So here’s what dashboard.conf looks like:

```nginx
server {
    listen 80;
    listen [::]:80;

    server_name dashboard.home.local;

    root /home/pi/dashboard;
    index index.html;
}
```

Of course, make sure that root points to the actual place where your flat files can be found. That should take care of it. If you use something like create-react-app to build and deploy these flat files, it’ll just work as‐is.

I eschew “bare metal,” Andrew

I won’t do a deep‐dive here, but Traefik Proxy is a good option if you prefer to containerize things. If you were already running these various services in different Docker containers, you could map them to the URLs you want by adding labels like traefik.http.routers.router0.rule=Host(`node-red.home.local`) to those containers. Traefik will handle the rest.
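Those labels are just key/value strings, so they’re easy to generate. A sketch for Traefik v2‐style labels; the router name is arbitrary, traefik.enable only matters if you’ve turned off exposedByDefault, and the service port label tells Traefik which container port to forward to:

```python
from typing import Dict


def traefik_labels(router: str, host: str, port: int) -> Dict[str, str]:
    """Docker labels routing `host` to a container's `port` through Traefik (v2 syntax)."""
    return {
        "traefik.enable": "true",  # needed only when exposedByDefault is false
        f"traefik.http.routers.{router}.rule": f"Host(`{host}`)",
        f"traefik.http.services.{router}.loadbalancer.server.port": str(port),
    }


for key, value in traefik_labels("nodered", "node-red.home.local", 1880).items():
    print(f"{key}={value}")
```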

I use Traefik on my other Pi server, the one that handles tasks other than home automation. After some experience with both approaches, I’ve decided that containerization involves an equal number of pain points, but in new and interesting places.

Coming in part two

No, that’s a joke. This is a one‐parter. I wrote this down mainly so I can refer back to it in a couple years after I’ve forgotten all this stuff, but if you’ve made it this far, you probably also found it helpful or interesting. Let’s see if I can’t write a few more things like that.

Comments

Andrew, when are we getting a new release of prototypejs? The github repo seems dead.

Thanks

We aren’t! Feel free to fork it and call it something else if you like.

Thanks for writing this, it helped me a lot for my current setup. Just a question:

After avahi-publish my subdomain, I was able to curl and ping locally within the host server (the one that’s running avahi). However, when trying to curl or ping from other machines it fails to resolve:

Did this happen to you at all?

Austin, that didn’t happen to me. I just tried this on my local network to make sure — from my home server I was able to run this:

And ping it from another computer:

The only hiccups I encountered while getting this working locally were sporadically caused by my Eero mesh network — annoying, but soft-resetting each access point fixes that issue. If mDNS works under normal circumstances on your home network, I can’t think of any obvious culprits.

Thanks for letting me know. I double‐checked on other machines, and it turned out that only my Pop OS device could not resolve the subdomains.

Testing on MacOS and Android devices worked flawlessly.

I solved this by installing libnss-resolve on the client device (sudo apt install libnss-resolve) and modifying /etc/nsswitch.conf to use mdns instead of mdns_minimal, and now everything works!

Thanks Andrew for this great write-up, it really helped clear up some misunderstanding about mDNS!

For my setup I have gone with Docker containers with Traefik reverse proxy along with traefik-avahi-helper to automatically publish the domains.

I found the same issue: app-server.local worked but app.server.local failed. I found what seems to be a known issue relating to mDNS ‘subdomains’ on Linux.

My solution was to configure my OpenWRT router’s DHCP to force an IP address for my local server domain, e.g.

After this everything worked as expected. Hope this might help…

In reply to my own comment about ‘subdomains’, configuring the router DHCP DNS results in bypassing mDNS to resolve the domain and although a workable solution it is not advised to mix them.

Further investigation found that where mDNS on Linux is provided by nss-mdns there is an intentional two-label limit by default.

From nss-mdns#etcmdnsallow:

So it seems rather than installing nss-resolve, the better solution is to configure nss-mdns with the following two steps:

- Create /etc/mdns.allow with the following contents:
- Add mdns4 to the end of the hosts line in /etc/nsswitch.conf so that the config is read, since the minimal version, mdns4_minimal, ignores the above config.

This is all clarified nicely in an mDNS answer on AskUbuntu.

Thanks, Calum. That AskUbuntu answer creates more mysteries for me, because it implies that my experience is an outlier, and that most OSes don’t support this at all. It suggests that macOS doesn’t support foo.bar.local, yet there are several Mac laptops and mobile devices in my household that tolerate such hostnames.

And since my Raspberry Pi machines resolve foo.bar.local just fine without having needed any special configuration, I can only assume that they’re not using nss-mdns, and that there is a different way of resolving mDNS hostnames that does not require nss.

Awesome post, exactly what I was looking for in my home server setup.

On Ubuntu 22.04 I’ve tried both mdns-publisher and traefik-avahi-helper – and they both fail.

The mdns-publish-cname says:

INFO: All CNAMEs published

And hangs up. By ctrl-c I get ^CINFO: Exiting on SIG_SETMASK… , nothing goes out.

The helper says:

docker logs 26d8d5ca3595

[ ‘traefik.local’, ‘yacht.local’ ]

starting cname.py

INFO: All CNAMEs published

And nothing happens – the hosts are not available.

Only the bash script works :-/

I don’t know if it still works, but a long time ago I wrote https://gitlab.com/cqexbesd/avahi_cnamed to solve this problem for me. I don’t use it anymore as everything has moved to systemd, but if you are lucky it might help. Maybe not.

I’ve used the original pi3g.com script and daemonized it by your recipe.

So far, it works.

In order not to do Python and run in a container, I wrote a lightweight daemon that does exactly what is described in the article. Maybe it will be useful for someone else too.

https://github.com/grishy/go-avahi-cname

Thanks SO much! I can’t believe how many sites I had to go through before finding this. It seems like such a basic need, and the number of responses suggest lots of other people think so too.

Hi Andrew, will you marry me?

No, for real, thanks so much for this, it saved me a lot of research and I love all the humour you sprinkled in there. If only all of the internet were a little more like you.

Hey, this is really great! One question though …

You mentioned this:

“The mdns-publish-cname binary is great because it accepts any number of aliases as arguments. … And it assumes we want to publish these aliases for ourselves, rather than for another machine, so we don’t even need to hard‐code an IP address.”

If I do want to point the alias to another machine, how do I do that? Is that something I should do with the mdns-publish-cname command? Or in the nginx reverse proxy?

/etc/avahi/hosts can do that for you; the drawbacks discussed in the article aren’t drawbacks if you’re broadcasting aliases on behalf of other machines. If those machines already have names and Avahi complains, then a script based on avahi-publish should do the trick.

Thanks! My idea was to put all of these in a central location (say, my router, since it’s running Debian) and not on each device … will try it out!

One question though before I go that route – are there any downsides to doing that instead of having each machine advertise their own hostname or sets of hostnames?

Hi, I’m reading your article and asking myself if there’s a way to reach a domotic server, which runs an instance of a Node.js application reachable via WebSocket, behind a router with a public IP, without opening ports on the router. I’m quite sure that it uses port 443 for the secure connection with the original app, and with Wireshark I can see some packets when using the original vendor app. Currently I’m trying to use something similar to Home Assistant to reach it, and locally it works fine. I need to reach this server also when I place it in another location, such as a beach house, but there I don’t have access to the router admin, and if I attach it directly to the router port the vendor app can reach it. I would like to know if this is possible with my Python script, which, as I said, works fine if I run it on the .local LAN. Thanks to anyone who has time to reply. And sorry for English grammar mistakes. Best regards and merry Xmas to all. Carlo

For the Docker setups out there (I haven’t tried it myself yet): if you use … for the automatic nginx proxy, you can try https://github.com/hardillb/nginx-proxy-avahi-helper for the mDNS part.


I went with setting up my own DNS server so that I could run PFblockerNG. For me, this use-case was quite compelling.

Andrew, I’ve been reading up on mDNS, and this is one of the better articles that I have come across.

One thing that none of the mDNS articles ever discuss is how do the mDNS and DNS-SD services behave in the presence of a DNS server residing on their lan?

DNS stores records and responds to queries, but it doesn’t advertise which nodes and services are available.

Thanks a lot for this! I’ve been struggling to get this working for days, and this article helped push me in the right direction in a lot of ways. I didn’t go with option 2 because I haven’t yet tinkered with Python on my Pi, but tbh I was quite happy having the Bash script with the 2 extra aliases I needed!

Cool, now we just need a trick to make letsencrypt certificates for these local services…

This feels like the wrong solution for a number of reasons; for one, .local hostnames will vary by definition from network to network.

I don’t feel any need to use HTTPS on my local intranet, though I certainly understand the impulse to minimize attack surface area if other systems have failed (like your Wi-Fi security). In my case, all the services I host in this manner either (a) contain no sensitive information or (b) have their own authentication system even when accessed locally.

This StackOverflow answer and the associated discussion wade into the debate over whether it’s worth anything to have encryption without trust. If your answer is “yes,” you can get away with self-signed certificates. If your answer is “no,” then a self-signed certificate authority seems to be worth doing, and the answer itself contains the instructions for doing it.

Here’s what I came up with for publishing hosts from a Kubernetes pod:

Hopefully someone else can make use of this.

Using this information I ended up forking mdns-publisher to add support for A records (basically the same trick as avahi-publish -a -R) and then also forking traefik-avahi-helper (which uses mdns-publisher) to support that fork. As usual, hours of work to avoid hard-coding a 3-line file, but it feels real classy to spin up a new docker compose file and have the service automagically get a name.

Thanks for pointing out the -R flag in avahi-publish! The man page on my system doesn’t show it, but the command accepts it. It was driving me mad trying to get /etc/avahi/hosts working. The documentation for avahi is really lacking. The avahi.hosts man page does not make it clear that you should not try to do this in the hosts file, and the avahi-daemon doesn’t complain about not being able to use those entries.

Hi Andrew, this is just a compliment for your writing style. I really like the way you manage to describe heavy technical stuff in a really lightweight manner. Impressive. I like the contents too, BTW, that’s why I found this article.

Thanks!